Bgroho Insights


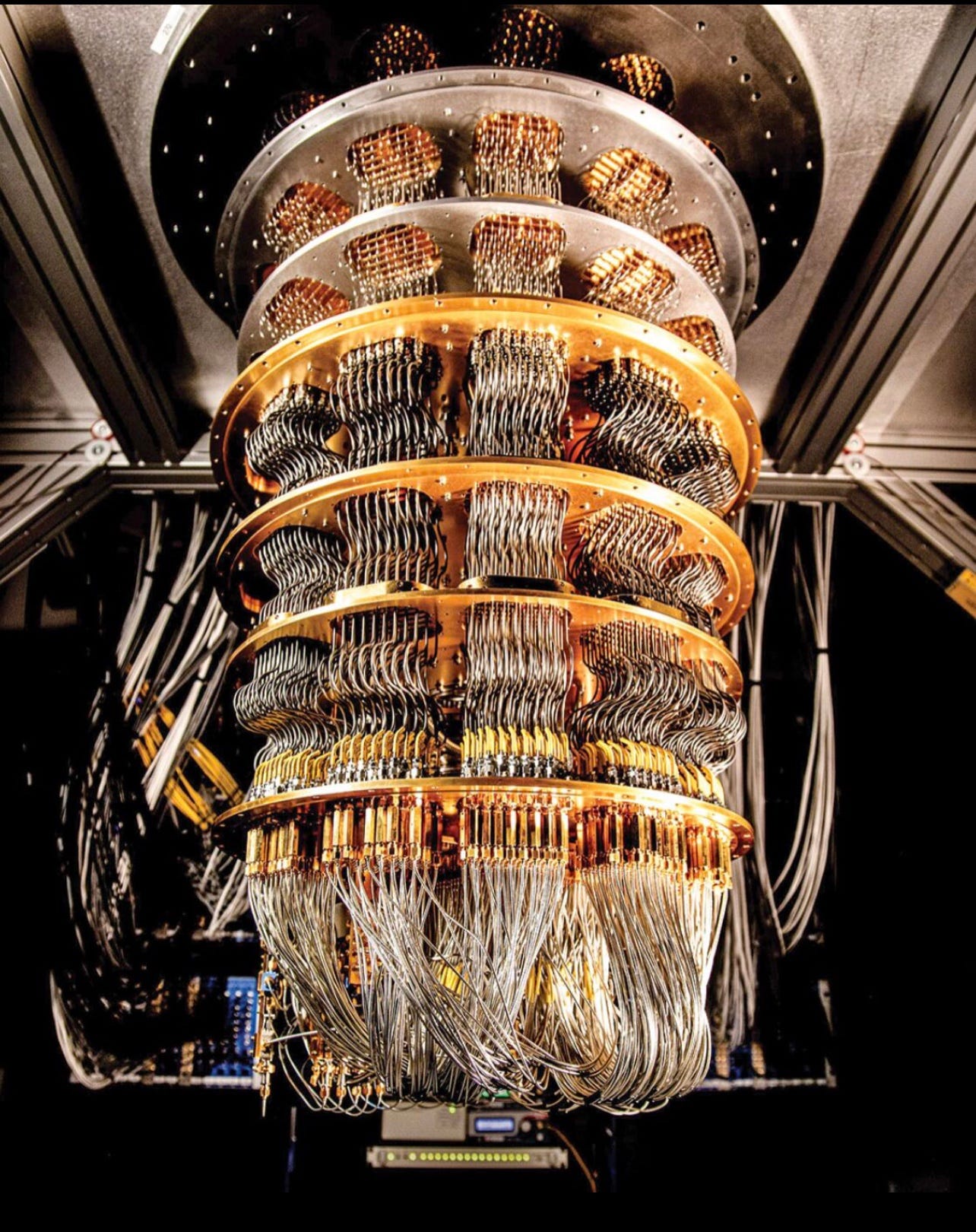

Quantum Computing: The Future or Just a Fad?

Discover if quantum computing is the groundbreaking future or a fleeting trend. Explore the hype, possibilities, and reality behind the buzz!

Understanding Quantum Computing: Principles and Potential

Understanding quantum computing begins with the principles that set it apart from classical computing. At its core are quantum bits, or qubits. Unlike a classical bit, a qubit can be in a superposition of 0 and 1, and several qubits can be entangled so that their states are correlated in ways no classical system can reproduce. A register of n qubits is described by 2^n amplitudes, but a measurement returns only one outcome, so a quantum computer does not simply process every input in parallel. Instead, quantum algorithms use quantum gates to manipulate those amplitudes so that interference makes the correct answers more likely to be measured. For certain problems, this offers a route to solutions that would be infeasible on traditional computers.
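To make superposition, entanglement, and gates a little more concrete, here is a minimal sketch that simulates one and two qubits with plain Python lists. It is an illustration only, not how real quantum hardware or any particular library works. The helper names (`apply_gate`, `probabilities`, `kron`) are made up for this example.

```python
import math

# A qubit state is a list of amplitudes; |0> is [1, 0].
ZERO = [1.0, 0.0]

# Hadamard gate: turns |0> into an equal superposition of |0> and |1>.
S = 1 / math.sqrt(2)
H = [[S, S],
     [S, -S]]

# CNOT gate on two qubits, basis order |00>, |01>, |10>, |11>.
# The first qubit is the control, the second is the target.
CNOT = [[1, 0, 0, 0],
        [0, 1, 0, 0],
        [0, 0, 0, 1],
        [0, 0, 1, 0]]

def apply_gate(gate, state):
    """Multiply a square gate matrix by a state vector."""
    return [sum(g * a for g, a in zip(row, state)) for row in gate]

def probabilities(state):
    """Probability of each measurement outcome (squared amplitude size)."""
    return [abs(a) ** 2 for a in state]

def kron(a, b):
    """Combine two single-register states into one joint state."""
    return [x * y for x in a for y in b]

# Superposition: one qubit, equal chance of measuring 0 or 1.
plus = apply_gate(H, ZERO)

# Entanglement: a Bell state, where only outcomes 00 and 11 can occur.
bell = apply_gate(CNOT, kron(plus, ZERO))

if __name__ == "__main__":
    print(probabilities(plus))  # two entries, each approximately 0.5
    print(probabilities(bell))  # approximately 0.5 for 00 and 11, 0 otherwise
```

Notice that the two-qubit state already needs four amplitudes. Each extra qubit doubles that count, which is why simulating even modest quantum systems on a classical machine gets expensive quickly.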

The potential applications of quantum computing are both exciting and transformative. Industries such as finance, pharmaceuticals, and cryptography stand to benefit significantly from this technology. For instance, quantum algorithms could change how we approach optimization problems, financial modeling, and drug discovery, although practical advantages in these areas are still being researched. As that research progresses, it is worth staying informed about developments in quantum computing, which may shape the future of technology.

Is Quantum Computing the Key to Unsolvable Problems?

Quantum computing represents a significant leap forward in computational capabilities, with the potential to tackle problems that have long been considered unsolvable in practice. Traditional computers, which rely on binary bits for processing, hit limits on problems whose number of possible solutions grows exponentially with the size of the input. Quantum computers use quantum bits, or qubits, which allow a different approach to problem-solving through phenomena like superposition and entanglement. This does not make every hard problem easy, but it offers the promise to transform industries and advance research by solving some problems currently deemed computationally infeasible. For further reading, visit Scientific American.

One of the most discussed applications of quantum computing is in optimization problems, which are crucial in fields like logistics, finance, and pharmaceuticals. Traditional algorithms are often unable to efficiently find the best solution among a vast number of possibilities. Quantum algorithms such as the Quantum Approximate Optimization Algorithm (QAOA) are designed to search these possibilities in a different way and may find good approximate solutions, although a clear advantage over the best classical methods has not yet been demonstrated. While we are still in the early stages of quantum development, the potential impact on areas such as cryptography and artificial intelligence could be substantial. For more insights, check IBM's Quantum Computing Basics.
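For readers curious about what QAOA actually does, the sketch below simulates a single QAOA layer on a classical computer for a toy MaxCut problem (split the nodes of a graph into two groups so that as many edges as possible cross between them). Everything here is an assumption made for illustration: the 4-node ring graph, the function names, and the simple grid search over the two angles. Because it runs on a classical machine, it shows the structure of the algorithm and nothing about quantum speedup.

```python
import cmath
import math

# Hypothetical toy problem: a ring of 4 nodes. The best possible cut is 4.
EDGES = [(0, 1), (1, 2), (2, 3), (3, 0)]
N = 4

def cut_value(z):
    """Count edges whose endpoints land in different groups.
    Bit i of the integer z says which group node i belongs to."""
    return sum(((z >> i) & 1) != ((z >> j) & 1) for i, j in EDGES)

def apply_mixer(state, beta):
    """Apply exp(-i * beta * X) to every qubit in turn."""
    c, s = math.cos(beta), -1j * math.sin(beta)
    for q in range(N):
        mask = 1 << q
        state = [c * state[z] + s * state[z ^ mask] for z in range(len(state))]
    return state

def qaoa_expectation(gamma, beta):
    """Average cut size measured after one QAOA layer."""
    dim = 2 ** N
    # Start in an equal superposition of all 2^N assignments.
    state = [1 / math.sqrt(dim)] * dim
    # Cost step: rotate each amplitude's phase by its cut value.
    state = [a * cmath.exp(-1j * gamma * cut_value(z)) for z, a in enumerate(state)]
    # Mixer step: let amplitudes interfere between neighboring assignments.
    state = apply_mixer(state, beta)
    return sum(abs(a) ** 2 * cut_value(z) for z, a in enumerate(state))

def grid_search(steps=40):
    """Try a grid of angles and keep the best (expectation, gamma, beta)."""
    best = (0.0, 0.0, 0.0)
    for i in range(steps):
        for j in range(steps):
            gamma = math.pi * i / steps
            beta = math.pi * j / steps
            value = qaoa_expectation(gamma, beta)
            if value > best[0]:
                best = (value, gamma, beta)
    return best

if __name__ == "__main__":
    print("random guess average:", qaoa_expectation(0.0, 0.0))
    print("best single-layer result:", grid_search())
```

With both angles at zero, the result matches random guessing. Well-chosen angles raise the average cut, but a single layer still falls short of the true optimum, which is one reason QAOA is described as an approximate method.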

Quantum Computing vs. Classical Computing: What's the Difference?

Quantum computing and classical computing represent two fundamentally different approaches to processing information. While classical computers use bits as the smallest unit of data, each either a 0 or a 1, quantum computers use qubits, which can exist in a combination of both states at once thanks to the principle of superposition. This allows quantum computers to solve certain problems, such as factoring large numbers or simulating quantum systems, much faster than the best known classical methods. For most everyday tasks, however, no such advantage is expected. The distinction between these two types of computing can greatly impact fields ranging from cryptography to drug discovery.
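Factoring is the classic example of where the two approaches part ways. The best-known quantum approach, Shor's algorithm, turns factoring into the problem of finding how often a sequence of remainders repeats (its "order"), and only that one step needs a quantum computer. The sketch below does the whole thing classically, with a brute-force loop standing in for the quantum step, so it is only practical for tiny numbers. The function names are invented for this example.

```python
from math import gcd

def order(a, n):
    """Smallest r > 0 with a**r % n == 1, assuming gcd(a, n) == 1.
    This brute-force loop is the step a quantum computer would speed up."""
    r, x = 1, a % n
    while x != 1:
        x = (x * a) % n
        r += 1
    return r

def factor_from_order(n, a):
    """Try to split n into two factors using the order of a modulo n.
    Returns a pair of factors, or None if this choice of a does not work."""
    shared = gcd(a, n)
    if shared != 1:
        # Lucky guess: a already shares a factor with n.
        return shared, n // shared
    r = order(a, n)
    if r % 2 == 1:
        return None  # odd order: try a different a
    y = pow(a, r // 2, n)
    if y == n - 1:
        return None  # trivial square root of 1: try a different a
    p = gcd(y - 1, n)
    return p, n // p

if __name__ == "__main__":
    print(order(7, 15))               # 4
    print(factor_from_order(15, 7))   # (3, 5)
```

For a number with hundreds of digits, the loop in `order` would run for an astronomically long time on a classical machine. That gap is what makes factoring such a frequently cited target for quantum computers, and why it matters for cryptography.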

Despite its potential, quantum computing is still in its infancy, and there are numerous challenges to overcome, including high error rates and short qubit coherence times. In contrast, classical computing has a well-established foundation, powering everyday devices and applications. As we explore the differences, it's important to recognize that the two paradigms are likely to coexist, with quantum computers complementing classical systems rather than replacing them entirely. For an in-depth understanding of these technologies, consider reading more on ScienceDirect.